How to back up and auto-update Unraid Docker containers in Komodo

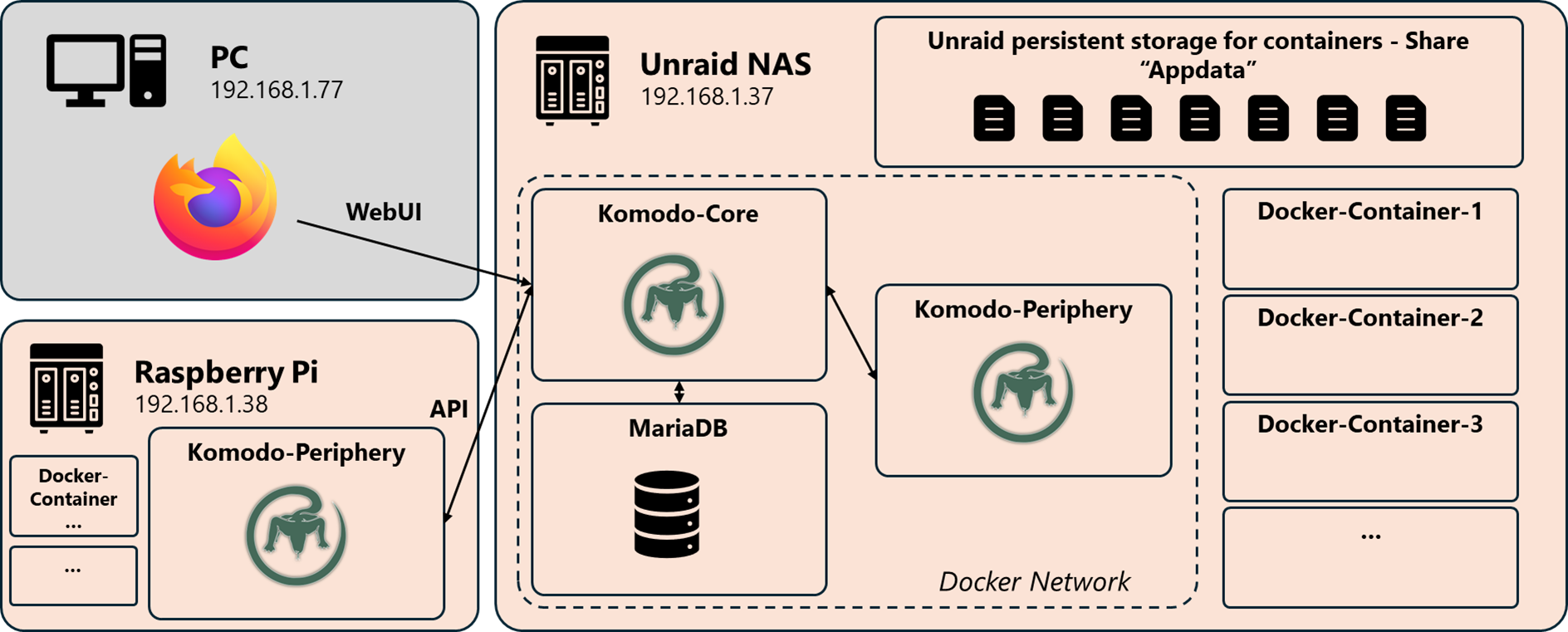

I have been using Unraid for the last 3 years and I absolutely love it. It allowed me to get a grasp of the self-hosting world. I fell down the rabbit hole and learned a lot, so much that I started to feel limited by Unraid's Docker management system. I wanted a solution with better integration with docker-compose and different kinds of CI/CD automation. That's when I heard about Komodo.

Komodo is a fantastic open-source project to manage infrastructure and deployment workflows across multiple servers. It offers a web UI to handle container monitoring, container management, automated deployments... I highly recommend it, even though I have not tested other container management platforms such as Portainer or Dockge.

Having decided to move to Komodo to manage my Docker containers, I followed foxxmd's great blog article: https://blog.foxxmd.dev/posts/migrating-to-komodo/. I migrated all my Docker container templates from Unraid to docker-compose. It was a pain, but I finally succeeded!

Missing features I need to recreate

However, there were features I needed to recreate to keep my previous setup working as is:

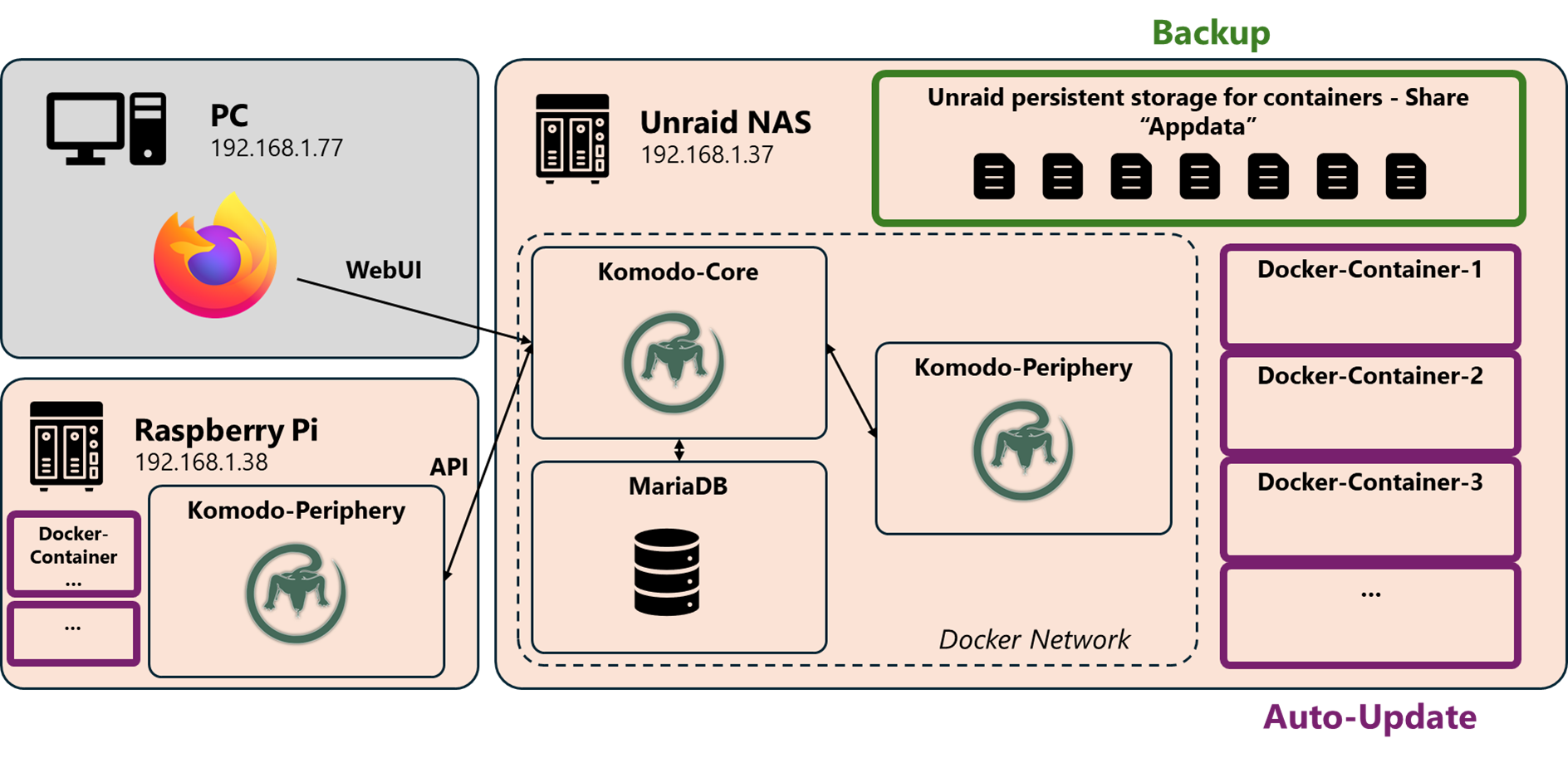

- I used to auto-update my Docker containers with the Unraid CA Auto Update Applications plugin. Basically, on a schedule, it ran a `docker compose pull` followed by a `docker compose up -d --force-recreate` command.

- I also used to auto-back up my containers' persistent configuration files in the appdata share `/mnt/user/appdata` with the AppData Backup plugin. Basically, it created an archive containing a copy of `/mnt/user/appdata` while keeping permissions intact.

- We also know that database containers' files should be backed up either with DB dumps or by stopping the container first. Otherwise, we risk a corrupted backup due to pending writes... So while we are at it, the backup and auto-update process should account for this: backups should only be performed when all containers are stopped.
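For reference, the plugin's update step boils down to two Docker Compose invocations per project. The TypeScript sketch below only builds those commands as argument lists and does not run Docker; the helper name `composeUpdateCommands` and the project path are hypothetical, and `--project-directory` is just one way of pointing Compose at a project.

```typescript
// Hypothetical helper: the two commands the Unraid plugin effectively ran for one project,
// expressed as argument vectors (nothing is executed here).
type Command = { cmd: string; args: string[] };

function composeUpdateCommands(projectDir: string): Command[] {
  // Global Compose flag pointing at the folder that holds the compose file.
  const base = ["compose", "--project-directory", projectDir];
  return [
    // 1. Pull newer images for every service in the project.
    { cmd: "docker", args: [...base, "pull"] },
    // 2. Recreate all containers, even those whose image or config did not change.
    { cmd: "docker", args: [...base, "up", "-d", "--force-recreate"] },
  ];
}

// Example with a hypothetical appdata project folder.
for (const c of composeUpdateCommands("/mnt/user/appdata/example")) {
  console.log([c.cmd, ...c.args].join(" "));
}
```

Komodo replaces this blunt "recreate everything" approach with per-service redeploys, as described further down.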

Komodo is perfectly capable of updating containers. It has built-in features to check for image updates and to redeploy these when required. We just have to configure it properly to recreate the schedule from Unraid CA Auto Update Applications.

To create backups of `/mnt/user/appdata`, I ended up choosing borgmatic. It's a small container image running BorgBackup (borg). You'll find the documentation here. It's quite simple: you provide the container with a YAML configuration file that sets the source, the destination, the retention policy... and a CRON file for the backup frequency.
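As an illustration of the retention part, here is a simplified TypeScript model of how `borg prune` applies rules such as `keep_daily` and `keep_monthly` (the config further down uses `keep_daily: 1` and `keep_monthly: 6`). It sketches the documented rule semantics, not borg's actual code: it works in UTC for determinism and ignores some edge cases of the real implementation. The names `pruneKeep`, `daily` and `monthly` are hypothetical.

```typescript
// Simplified model of borg prune retention: for each rule, walk archives from newest to
// oldest and keep the newest archive of each period, until `count` periods are kept.
// Archives already kept by an earlier rule do not count towards a later rule.
type Rule = { count: number; period: (d: Date) => string };

const daily = (d: Date) => d.toISOString().slice(0, 10);  // "YYYY-MM-DD" (UTC)
const monthly = (d: Date) => d.toISOString().slice(0, 7); // "YYYY-MM" (UTC)

function pruneKeep(archives: Date[], rules: Rule[]): Date[] {
  const sorted = [...archives].sort((a, b) => b.getTime() - a.getTime());
  const kept = new Set<Date>();
  for (const { count, period } of rules) {
    let last: string | null = null;
    let n = 0;
    for (const a of sorted) {
      if (n >= count) break;
      const p = period(a);
      if (p === last) continue; // not the newest archive of this period
      last = p;
      if (!kept.has(a)) {
        kept.add(a);
        n++;
      }
    }
  }
  return sorted.filter(a => kept.has(a));
}

// 40 weekly archives, every Monday from 2024-01-01 to 2024-09-30.
const weekly = Array.from({ length: 40 }, (_, i) => new Date(Date.UTC(2024, 0, 1 + 7 * i)));
const keep = pruneKeep(weekly, [{ count: 1, period: daily }, { count: 6, period: monthly }]);
console.log(keep.map(d => d.toISOString().slice(0, 10)).join(" "));
// 2024-09-30 2024-08-26 2024-07-29 2024-06-24 2024-05-27 2024-04-29 2024-03-25
```

In this model, weekly backups with those two settings keep the newest archive plus the last archive of each of the six preceding months, seven archives in total.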

Backing up containers with Komodo and Borg

Here is the backup process I set up:

- We deploy the borgmatic container image.

- On the schedule defined in borgmatic's CRON file, the backup launches.

- Borgmatic calls the Komodo API to stop all the containers to back up, i.e. those tagged `auto-stop`.

- Borgmatic backs up the appdata files to another share, `/mnt/user/Backups`, ideally on the array rather than on the cache drive... I personally also send the whole backup (encrypted) to AWS to follow a proper 3-2-1 backup strategy.

- Borgmatic calls the Komodo API to start all the containers tagged `auto-start` to restore the services.
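The ordering above can be sketched in TypeScript with the three steps passed in as hypothetical async functions (`stopTagged`, `runBackup`, `startTagged`) standing in for the borgmatic hooks and the archive creation. One design choice belongs to this sketch only and is not necessarily how the borgmatic hooks behave: the restart sits in a `finally` block, so services come back even when stopping or backing up fails.

```typescript
// Each step is a hypothetical async function standing in for a borgmatic hook or action.
type Step = () => Promise<void>;

// Stop tagged stacks, back up, and always attempt to restart.
async function backupWithStoppedStacks(stopTagged: Step, runBackup: Step, startTagged: Step): Promise<void> {
  try {
    // If stopping fails, the backup is skipped rather than run against live databases.
    await stopTagged();
    await runBackup();
  } finally {
    // Runs whether the steps above succeeded or threw, so services come back up.
    await startTagged();
  }
}
```

Whether to restart services after a failed backup is a judgement call: here availability wins, and the original error still propagates to the caller.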

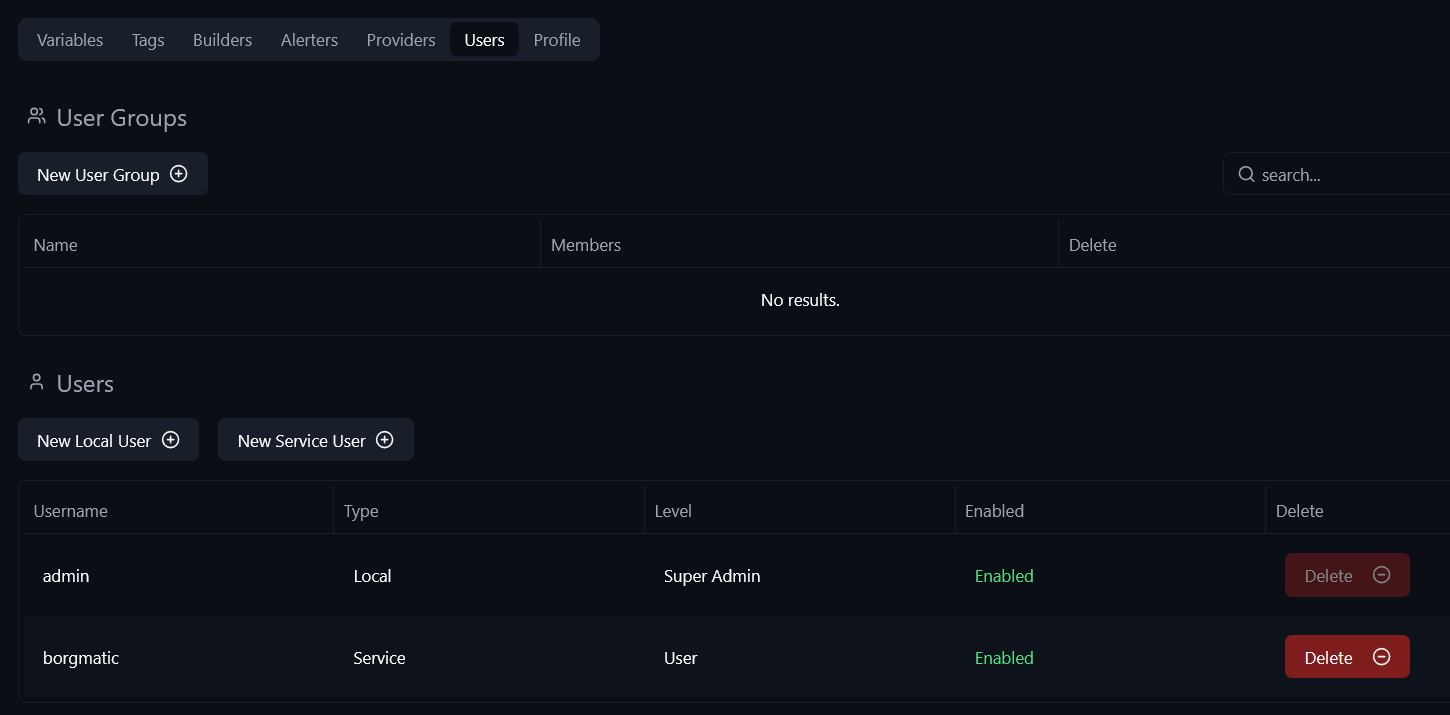

Create a Komodo service user for borgmatic

Before deploying the borgmatic image, you need to create a service user account in Komodo. This allows borgmatic to call the Komodo API.

Create an API key for that user:

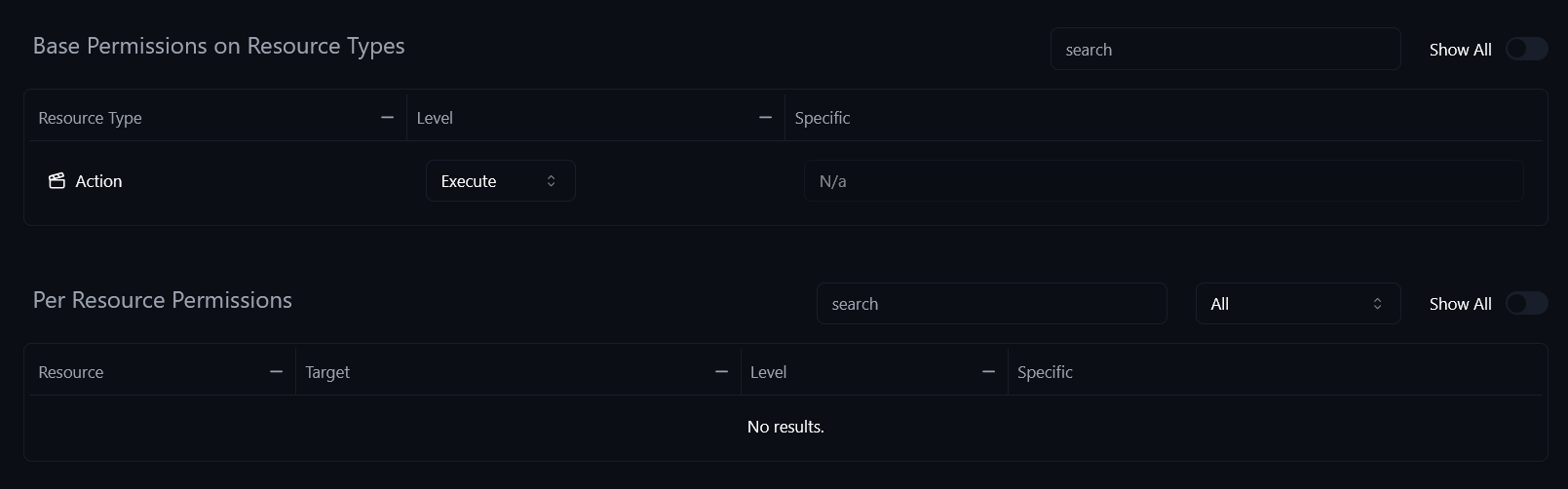

Then give the borgmatic user permission to execute actions (start / stop containers...). You can be more fine-grained, but I chose to give it execute permission on all actions.

Deploy borgmatic container

Now we can deploy the borgmatic container, passing the API key and secret of the Komodo borgmatic user as environment variables:

```yaml
services:
  borgmatic:
    image: ghcr.io/borgmatic-collective/borgmatic
    #labels:
    #  komodo.skip: # Prevent Komodo from stopping with StopAllContainers
    volumes:
      - $BASE_PATH:/mnt/source:ro # backup source
      - $BASE_BACKUP_PATH/appdata-borg:/mnt/borg-repository # backup target
      - $BASE_PATH/borgmatic/config:/etc/borgmatic.d/ # borgmatic config file(s) + crontab.txt, see below
      - $BASE_PATH/borgmatic/keyfiles:/root/.config/borg # config and keyfiles
      - $BASE_PATH/borgmatic/cache:/root/.cache/borg # checksums used for deduplication
    environment:
      - TZ=${TZ}
      - KOMODO_API_KEY=${KOMODO_API_KEY} # for the user we just created
      - KOMODO_API_SECRET=${KOMODO_API_SECRET} # for the user we just created
      - KOMODO_DB_USERNAME=${KOMODO_DB_USERNAME} # to back up the Komodo core DB by creating a dump
      - KOMODO_DB_PASSWORD=${KOMODO_DB_PASSWORD} # to back up the Komodo core DB by creating a dump
      #- BORG_PASSPHRASE=${BORG_PASSPHRASE} # Repo encryption...
    restart: unless-stopped
    networks:
      - komodo-net # To call Komodo API endpoints locally and back up mongodb

networks:
  komodo-net:
    external: true # assumes the network already exists (e.g. created alongside Komodo core)
```

docker-compose.yaml

Here is the crontab file I use to create a backup every week (every Monday at 03:00). I added the `--verbosity` flag to get more information in the Docker container logs; that is just a personal preference.

```
0 3 * * 1 borgmatic --verbosity 1 --list --stats
```

crontab.txt
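For readers less used to cron syntax: the five fields of `0 3 * * 1` are minute, hour, day of month, month and day of week, so the job fires every Monday at 03:00 in the container's `TZ`. The TypeScript sketch below computes the next run only for this restricted `M H * * D` shape; `nextWeeklyRun` is a hypothetical helper, not a general cron parser, and it uses UTC to stay deterministic.

```typescript
// Next run strictly after `from` for a weekly cron entry "M H * * D" (D: 0 = Sunday ... 6 = Saturday).
// Only that shape is supported; anything else throws.
function nextWeeklyRun(expr: string, from: Date): Date {
  const [m, h, dom, mon, dow] = expr.trim().split(/\s+/);
  if (dom !== "*" || mon !== "*") throw new Error(`unsupported expression: ${expr}`);
  const minute = Number(m), hour = Number(h), weekday = Number(dow);
  if ([minute, hour, weekday].some(Number.isNaN)) throw new Error(`unsupported expression: ${expr}`);
  // UTC keeps the example deterministic; cron itself uses the container's local time.
  const next = new Date(from.getTime());
  next.setUTCSeconds(0, 0);
  next.setUTCHours(hour, minute);
  // Days until the requested weekday (0..6).
  let days = (weekday - next.getUTCDay() + 7) % 7;
  // Same weekday but the time has already passed (or is now): move to next week.
  if (days === 0 && next.getTime() <= from.getTime()) days = 7;
  next.setUTCDate(next.getUTCDate() + days);
  return next;
}

// 2024-01-03 is a Wednesday, so the next Monday 03:00 is 2024-01-08.
console.log(nextWeeklyRun("0 3 * * 1", new Date("2024-01-03T00:00:00Z")).toISOString());
```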

And here is the borgmatic config I use. You'll see two sections at the bottom where borgmatic calls the Komodo API with the credentials of the user account we created. They call the Komodo actions named `auto-stop-stacks` and `auto-start-stacks`, which stop or start the Komodo stacks depending on their tags. We will see how to create these in the next section.

Also note that I address Komodo by its Docker container name: since the komodo-core and borgmatic containers are on the same Docker network, I don't use the IP address of the Unraid host but the komodo-core container name, `http://komodo-core:9120/execute`.

```yaml
# List of source directories and files to back up. Globs and tildes
# are expanded. Do not backslash spaces in path names.
source_directories:
    - /mnt/source

# A required list of local or remote repositories with paths and
# optional labels (which can be used with the --repository flag to
# select a repository).
repositories:
    - path: /mnt/borg-repository
      label: backup

# Any paths matching these patterns are excluded from backups.
exclude_patterns:
    - /mnt/source/jellyfin/data/metadata # Library images
    - /mnt/source/radarr/MediaCover
    - /mnt/source/sonarr/MediaCover
    - /mnt/source/ollama # AI Models
    - /mnt/source/*.log
    - /mnt/source/mongodb-komodo # Backup dump is taken below

mongodb_databases:
    - name: all
      hostname: mongodb-komodo
      port: 27017 # Default 27017
      username: ${KOMODO_DB_USERNAME}
      password: ${KOMODO_DB_PASSWORD}

# https://borgbackup.readthedocs.io/en/stable/usage/prune.html#
keep_daily: 1
keep_monthly: 6

# Name of the consistency check to run:
# * "repository" checks the consistency of the
#   repository.
# * "archives" checks all of the archives.
# * "data" verifies the integrity of the data
#   within the archives and implies the "archives"
#   check as well.
# * "extract" does an extraction dry-run of the
#   most recent archive.
checks:
    - name: repository
      frequency: 2 weeks
    - name: archives
      frequency: always
    - name: extract
      frequency: 1 month
    - name: data
      frequency: 1 month

# Using Apprise for Notifications
apprise:
    states:
        - start
        - finish
        - fail
    services:
        - url: <REDACTED_DISCORD_WEBHOOK>
          label: discord
    send_logs: false # Send borgmatic logs to Apprise services as part of the "finish", "fail", and "log" states.
    start:
        title: ⚙️ Container Backup Started
        body: Starting borgmatic backup process.
    finish:
        title: ✅ Container Backup Successful
        body: Finished borgmatic backup process.
    fail:
        title: ❌ Container Backup Failed
        body: Container backups have failed.

commands:
    # Call komodo to stop all containers backup its DB and commit its config.
    # Sleep 5 minutes after the call if successful.
    - before: action
      when:
          - create
      run:
          - >
            curl -sS http://komodo-core:9120/execute
            --header "Content-Type: application/json"
            --header "X-Api-Key: ${KOMODO_API_KEY}"
            --header "X-Api-Secret: ${KOMODO_API_SECRET}"
            --data '{ "type": "RunAction", "params": { "action": "auto-stop-stacks" } }'
            && sleep 300 && echo "- Starting Backup"

    # Call komodo to restart the containers after a backup.
    - after: action
      when:
          - create
      run:
          - >
            curl -sS http://komodo-core:9120/execute
            --header "Content-Type: application/json"
            --header "X-Api-Key: ${KOMODO_API_KEY}"
            --header "X-Api-Secret: ${KOMODO_API_SECRET}"
            --data '{ "type": "RunAction", "params": { "action": "auto-start-stacks" } }'
```

config.yaml
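The two `curl` hooks above send the same JSON body to Komodo's `/execute` endpoint. As a minimal sketch, here is the equivalent request built in TypeScript for `fetch`; the URL, headers and body mirror the hooks, while `buildRunActionRequest` and `runKomodoAction` are hypothetical names. Building the request separately makes it easy to inspect without contacting Komodo.

```typescript
// Mirrors the borgmatic curl hooks: POST a RunAction request to Komodo core.
interface KomodoCredentials { key: string; secret: string; }

function buildRunActionRequest(baseUrl: string, action: string, creds: KomodoCredentials) {
  return {
    url: `${baseUrl}/execute`,
    init: {
      method: "POST", // curl switches to POST when --data is given
      headers: {
        "Content-Type": "application/json",
        "X-Api-Key": creds.key,
        "X-Api-Secret": creds.secret,
      },
      body: JSON.stringify({ type: "RunAction", params: { action } }),
    },
  };
}

// Sends the request and fails loudly on a non-2xx status.
// (curl -sS, by contrast, exits 0 on HTTP error statuses unless --fail is added.)
async function runKomodoAction(baseUrl: string, action: string, creds: KomodoCredentials): Promise<unknown> {
  const { url, init } = buildRunActionRequest(baseUrl, action, creds);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`Komodo returned HTTP ${res.status} for ${action}`);
  return res.json();
}
```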

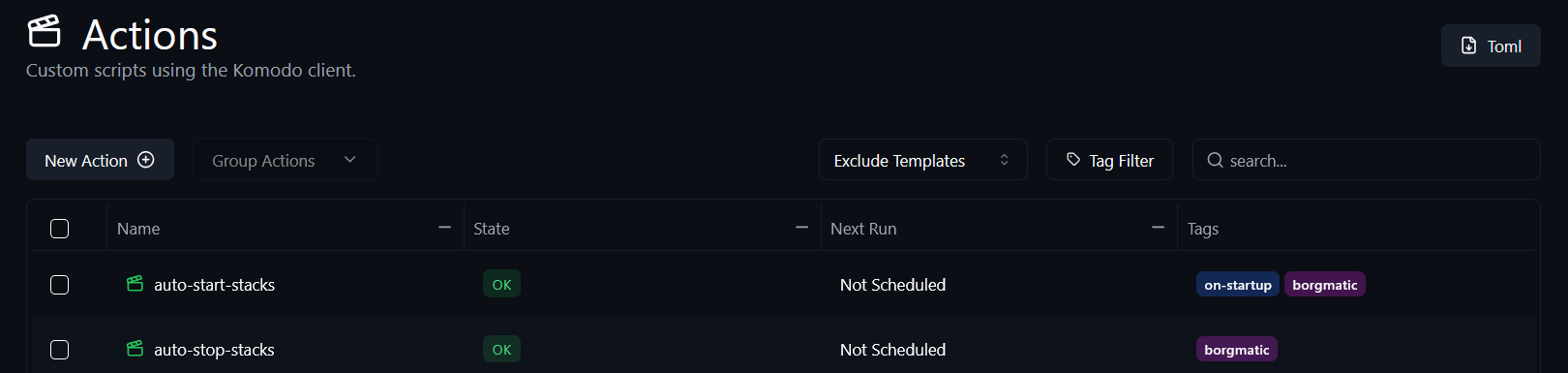

Create auto-start-stacks and auto-stop-stacks actions in Komodo

We now have borgmatic running with the appropriate configuration. It calls one Komodo action at the beginning of the routine and another one at the end. Let's see how that works.

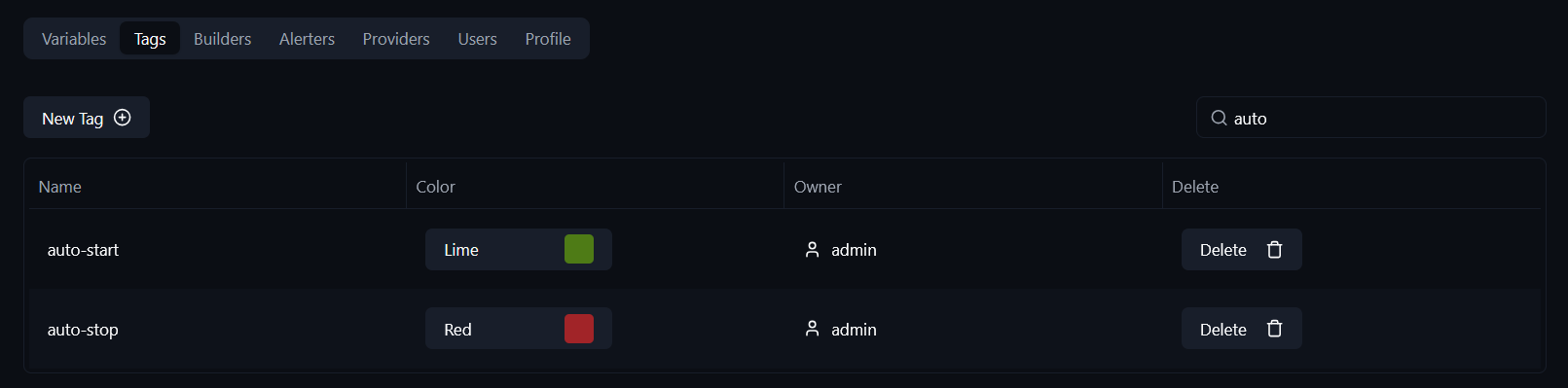

First, create two tags in Komodo:

- `auto-stop`: when applied to a Komodo stack, borgmatic has Komodo stop it automatically at the beginning of the backup.

- `auto-start`: when applied to a Komodo stack, borgmatic has Komodo start it automatically at the end of the backup.

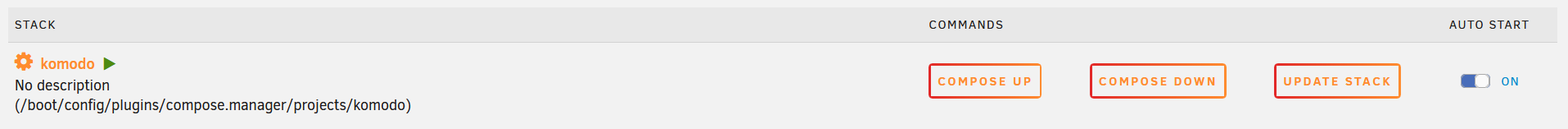

Then, associate the tags with the stacks that need to be stopped and restarted during backups. Most of my stacks have both tags. Be careful not to apply the `auto-stop` tag to the stack managing borgmatic, or you will kill the container right before the backup.

Some of the containers in my troubleshoot stack are only there for troubleshooting and should be down most of the time. That's why this stack only has the `auto-stop` tag: if I ever forget to stop it, the next backup (weekly in my case) will stop it and it will not be restarted afterwards.

Be careful: template stacks can be tagged, but they will not pass the tag on to their children.
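To double-check which stacks a given tag combination affects, here is a small TypeScript sketch. The `backupPlan` function and the stack names and tag assignments are hypothetical examples modelled on the setup described above: most stacks carry both tags, the troubleshooting stack only `auto-stop`, and the stack running borgmatic only `auto-start`.

```typescript
type Stack = { name: string; tags: string[] };

// Which stacks each phase of the backup touches, based only on their tags.
function backupPlan(stacks: Stack[]) {
  const withTag = (tag: string) => stacks.filter(s => s.tags.includes(tag)).map(s => s.name);
  const stopped = withTag("auto-stop");
  const started = withTag("auto-start");
  return {
    stopped,
    started,
    // Stopped but never restarted: stays down after the backup.
    leftDown: stopped.filter(n => !started.includes(n)),
    // Started without being stopped first, e.g. the stack running borgmatic itself.
    neverStopped: started.filter(n => !stopped.includes(n)),
  };
}

// Hypothetical example data.
const plan = backupPlan([
  { name: "media", tags: ["auto-stop", "auto-start"] },
  { name: "troubleshoot", tags: ["auto-stop"] },
  { name: "backup", tags: ["auto-start"] },
]);
console.log(plan.leftDown.join(", "));     // troubleshoot
console.log(plan.neverStopped.join(", ")); // backup
```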

Create two actions in Komodo, one for each tag.

Here is the TypeScript code. At the very top of the script, you just have to provide your tag name, the command, and the maximum wait time for the action to complete. The command keywords can be found here. To start the stacks, use `StartStack`; to stop them, use `StopStack`... The script is limited to stacks, but you can use any command that works on them, such as `DeployStack`...

```typescript
// Variables
const TAG = 'auto-start';
const MAX_WAIT_TIME = 10 * 60 * 1000; // 10 minutes
const COMMAND = 'StartStack';

// Error tracking
let errors: any[] = [];

// Helper: ensure a tag exists and return its ID
async function getOrCreateTag(tagName: string) {
  const tags = await komodo.read('ListTags', {});
  const existing = tags.find(t => t.name === tagName);
  if (existing) return existing._id.$oid;
  try {
    const tagInfo = await komodo.write('CreateTag', { name: tagName });
    return tagInfo._id.$oid;
  } catch (e) {
    console.log(`⚠️ Failed to create tag "${tagName}":`, e);
    errors.push(e);
    return null;
  }
}

// Run COMMAND on all stacks with the tag
async function startCommandOnStacks() {
  const autoTagId = await getOrCreateTag(TAG);
  if (!autoTagId) return;

  // Fetch all stacks that have the tag
  const stacks = await komodo.read('ListStacks', { query: { tags: [TAG] } });

  // Step 1: Fire COMMAND on ALL stacks at once and keep their Update objects
  const updates = await Promise.all(
    stacks.map(async (stack) => {
      try {
        console.log(`🚀 Launching ${COMMAND} on stack "${stack.name}"...`);
        const update = await komodo.execute(COMMAND, { stack: stack.id });
        return { stack, update };
      } catch (e) {
        console.log(`⚠️ Failed to launch ${COMMAND} on stack "${stack.name}":`, e);
        errors.push(e);
        return { stack, update: null }; // Mark as failed
      }
    })
  );

  // Step 2: Wait for ALL updates to reach "Complete" status (with timeout)
  // See doc: https://docs.rs/komodo_client/latest/komodo_client/entities/update/struct.Update.html
  const waitForCompletion = async ({ stack, update }) => {
    if (!update) return; // Skip if the command failed to launch
    const startTime = Date.now();
    while (update.status !== 'Complete') {
      // Check for timeout
      if (Date.now() - startTime > MAX_WAIT_TIME) {
        throw new Error(`Timeout: Stack "${stack.name}" did not complete ${COMMAND} within ${MAX_WAIT_TIME / 1000 / 60} min`);
      }
      // Wait 2 seconds before polling again
      await new Promise(resolve => setTimeout(resolve, 2000));
      update = await komodo.read('GetUpdate', { id: update._id.$oid }); // Refresh update status
      console.log(`⏳ "${stack.name}" ${COMMAND} status: ${update.status}`);
    }
    // "Complete" only means finished: check whether it actually succeeded
    if (!update.success) {
      throw new Error(`Stack "${stack.name}" failed to perform ${COMMAND}: ${JSON.stringify(update.logs)}`);
    }
    console.log(`✅ Stack "${stack.name}" has fully performed ${COMMAND}`);
  };

  // Wait for all stacks to complete (or fail)
  await Promise.all(
    updates.map(update =>
      waitForCompletion(update).catch(e => {
        console.log(`❌ Error performing ${COMMAND} on stack "${update.stack.name}":`, e.message);
        errors.push(e);
      })
    )
  );
}

// Execute
await startCommandOnStacks();
if (errors.length > 0) {
  throw new Error(`⚠️ Encountered ${errors.length} errors during stacks ${COMMAND} commands.`);
}
```

Code for the actions
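The core of the script is the "fire everything, then poll each update until it completes or times out" pattern. Below is a standalone TypeScript sketch of just the polling step, with Komodo's `GetUpdate` read replaced by an injected function so it can be exercised without a Komodo instance. `pollUntilComplete`, `fetchStatus` and the `UpdateLike` shape are hypothetical; only the `Complete` status value and the `success` flag come from the script above.

```typescript
// Minimal shape of a Komodo Update as used by the action script (status + success flag).
type UpdateLike = { status: string; success: boolean };

async function pollUntilComplete(
  fetchStatus: () => Promise<UpdateLike>, // e.g. a wrapper around komodo.read('GetUpdate', ...)
  maxWaitMs: number,
  intervalMs = 2000,
): Promise<UpdateLike> {
  const start = Date.now();
  let update = await fetchStatus();
  while (update.status !== "Complete") {
    if (Date.now() - start > maxWaitMs) {
      throw new Error(`Timed out after ${maxWaitMs} ms (last status: ${update.status})`);
    }
    await new Promise(resolve => setTimeout(resolve, intervalMs));
    update = await fetchStatus();
  }
  // "Complete" only means the operation finished; `success` tells whether it worked.
  if (!update.success) throw new Error("Operation completed but reported failure");
  return update;
}
```

Keeping the status source injectable is what makes the timeout and failure paths testable with a fake.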

You should test the action. You'll see something like this if it works correctly.

🚀 Launching StartStack on stack "ai-horse"...

🚀 Launching StartStack on stack "archive"...

🚀 Launching StartStack on stack "auth"...

🚀 Launching StartStack on stack "backup"...

🚀 Launching StartStack on stack "blogging"...

🚀 Launching StartStack on stack "download"...

🚀 Launching StartStack on stack "homeassistant"...

🚀 Launching StartStack on stack "immich"...

🚀 Launching StartStack on stack "karakeep"...

🚀 Launching StartStack on stack "media"...

🚀 Launching StartStack on stack "misc"...

🚀 Launching StartStack on stack "monitoring"...

🚀 Launching StartStack on stack "nextcloud"...

🚀 Launching StartStack on stack "obsidian"...

🚀 Launching StartStack on stack "ollama-ai"...

🚀 Launching StartStack on stack "proxy"...

🚀 Launching StartStack on stack "semaphore"...

⏳ "obsidian" StartStack status: InProgress

⏳ "nextcloud" StartStack status: InProgress

⏳ "karakeep" StartStack status: InProgress

⏳ "archive" StartStack status: InProgress

⏳ "semaphore" StartStack status: InProgress

⏳ "ollama-ai" StartStack status: InProgress

⏳ "download" StartStack status: InProgress

⏳ "proxy" StartStack status: InProgress

⏳ "media" StartStack status: InProgress

⏳ "ai-horse" StartStack status: Complete

✅ Stack "ai-horse" has fully performed StartStack

⏳ "misc" StartStack status: InProgress

⏳ "monitoring" StartStack status: InProgress

⏳ "homeassistant" StartStack status: InProgress

⏳ "auth" StartStack status: InProgress

⏳ "immich" StartStack status: InProgress

⏳ "blogging" StartStack status: InProgress

⏳ "backup" StartStack status: Complete

✅ Stack "backup" has fully performed StartStack

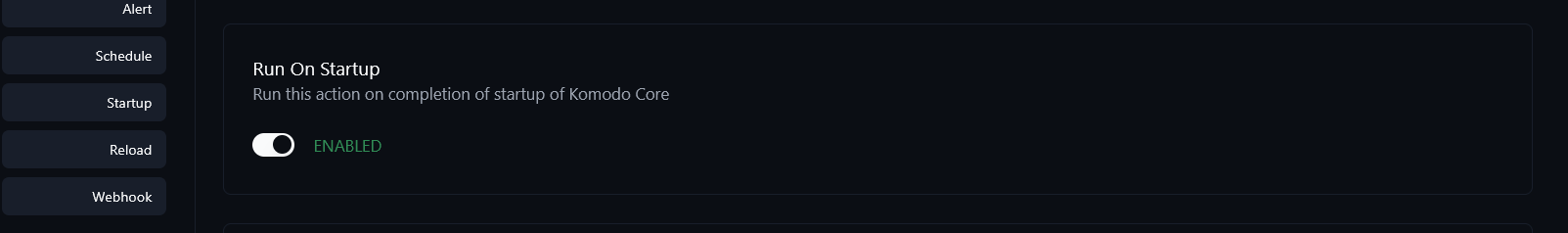

[...]For the auto-start-stack action I also activated the option to execute it as soon as Komodo starts-up. If my server ever shuts down and starts-up again Komodo is started by Unraid auto-start and all the auto-start tagged stacks in Komodo will start too.

Auto-update containers using Komodo

First, a small disclaimer: auto-updating container images can introduce breaking changes that disrupt your services or, worse, lead to data loss. Even minor updates can alter behavior, causing crashes, errors, or unexpected downtime... In my case, I pin the image version for critical containers (authentik, jellyfin...). It's your call to decide what is critical to you and what isn't worth the risk.
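If you pin versions for critical containers, it helps to spot images that still float. The TypeScript sketch below flags image references with no tag or with the `latest` tag, and treats digest references (`@sha256:...`) as pinned. `isUnpinned` is a hypothetical helper, the example image names other than borgmatic are made up, and the parsing is a simplification of Docker's image reference format.

```typescript
// Returns true when an image reference floats (no tag, or the "latest" tag).
function isUnpinned(image: string): boolean {
  if (image.includes("@")) return false; // digest references are immutable
  // Only inspect the last path segment, so a registry port ("host:5000/app") is not read as a tag.
  const lastSegment = image.slice(image.lastIndexOf("/") + 1);
  const colon = lastSegment.indexOf(":");
  if (colon === -1) return true; // no tag means "latest"
  return lastSegment.slice(colon + 1) === "latest";
}

const images = [
  "ghcr.io/borgmatic-collective/borgmatic", // as in the compose file above
  "example/app:1.2.3",                      // hypothetical pinned image
  "localhost:5000/tools/app:latest",        // hypothetical private-registry image
];
for (const img of images) console.log(`${isUnpinned(img) ? "floating" : "pinned"}: ${img}`);
```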

Updating container images is easier, as it's largely built into Komodo already. There may be better ways to do it, but I simply created a Komodo procedure: there is a function called `GlobalAutoUpdate` you can call from a procedure.

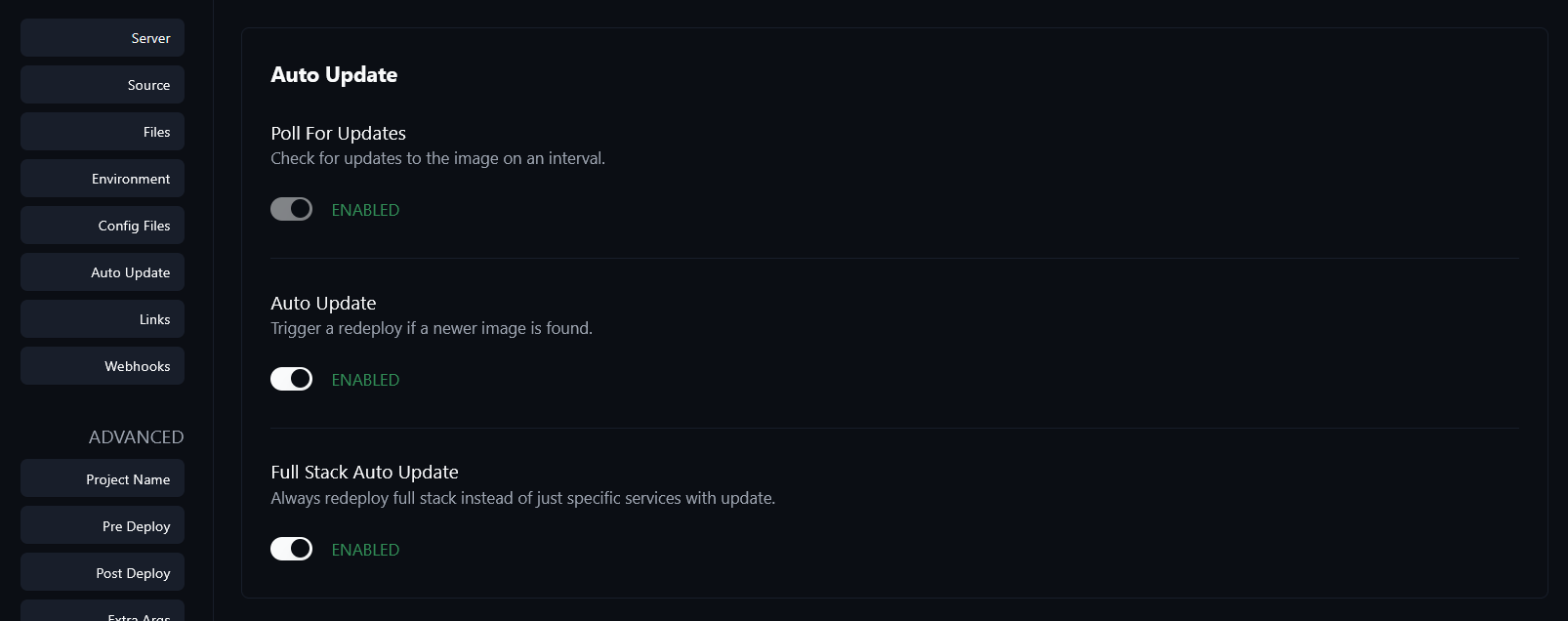

> When GlobalAutoUpdate is run, Komodo will loop through all the resources with either of these options enabled, and run PullStack / PullDeployment in order to pick up any newer images at the same tag.
>
> For resources with Poll for Updates enabled and an Alerter configured, it will send an alert that a newer image is available, and display the update available indicator in the UI
>
> For resource with Auto Update enabled, it will go ahead and Redeploy just the services with newer images (by default). If an Alerter is configured, it will also send an alert that this occured.

https://komo.do/docs/resources/auto-update
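Put differently, each resource goes through a simple decision. The TypeScript sketch below restates the quoted behaviour as a pure function; it is a reading of the documentation, not Komodo's code, and the function and field names (`globalAutoUpdateOutcome`, `pollForUpdates`, `autoUpdate`, `hasAlerter`) are hypothetical.

```typescript
type Resource = { pollForUpdates: boolean; autoUpdate: boolean; hasAlerter: boolean };
type Outcome = { pull: boolean; redeploy: boolean; alert: boolean };

// Restates the documented GlobalAutoUpdate behaviour for one stack or deployment,
// given whether the pull found a newer image at the same tag.
function globalAutoUpdateOutcome(r: Resource, newerImage: boolean): Outcome {
  const enrolled = r.pollForUpdates || r.autoUpdate; // only these resources are pulled
  return {
    pull: enrolled,
    redeploy: r.autoUpdate && newerImage,
    // Either an "update available" alert (poll only) or an "updated" alert (auto update).
    alert: enrolled && newerImage && r.hasAlerter,
  };
}
```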

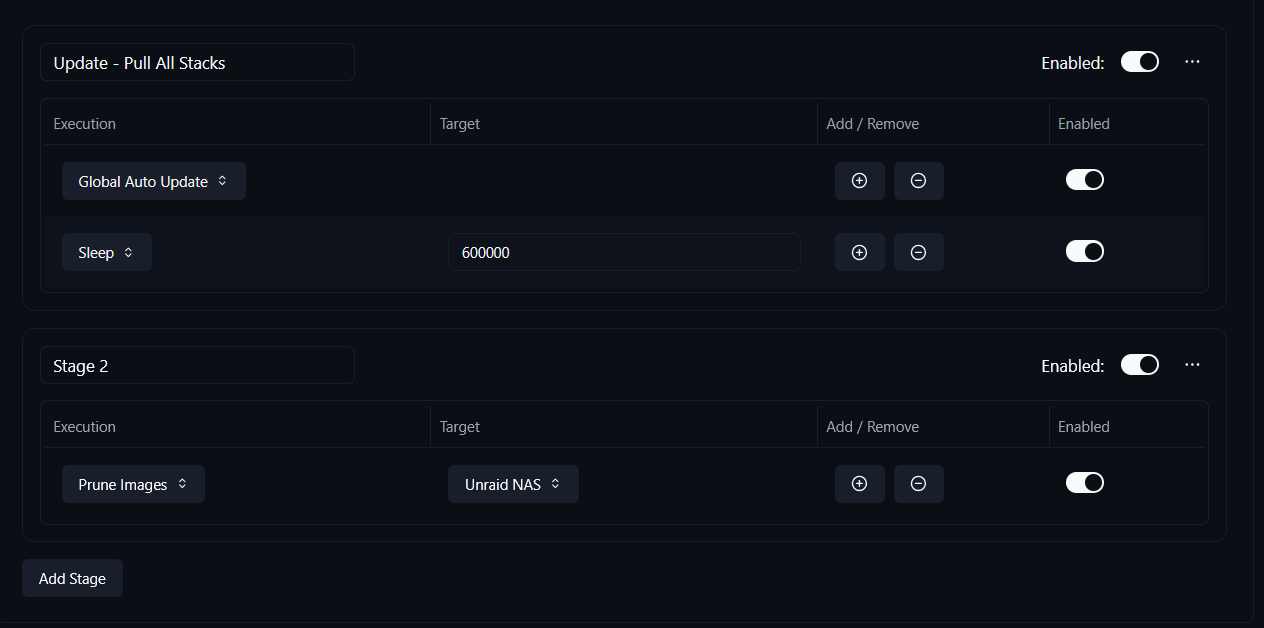

Here is the procedure: I call `GlobalAutoUpdate`, wait 10 minutes for the update to finish, and then prune the old images.
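The same three steps could also be written as a Komodo Action script instead of a procedure. The sketch below assumes the `komodo` client available in Actions (as used in the stack script above) and assumes that `GlobalAutoUpdate` and `PruneImages` are accepted by `komodo.execute` with the parameters shown; the server name `unraid` and the function name `autoUpdateThenPrune` are placeholders. It takes the client as a parameter so it can be tried with a stub.

```typescript
// Minimal client shape; in a Komodo Action this would be the global `komodo` object (assumption).
interface ExecuteClient {
  execute(type: string, params: Record<string, unknown>): Promise<unknown>;
}

const sleep = (ms: number) => new Promise<void>(resolve => setTimeout(resolve, ms));

async function autoUpdateThenPrune(
  client: ExecuteClient,
  server: string,          // placeholder server name
  waitMs = 10 * 60 * 1000, // the procedure waits 10 minutes
): Promise<void> {
  // 1. Pull, and redeploy where Auto Update is enabled (assumed to take no parameters).
  await client.execute("GlobalAutoUpdate", {});
  // 2. Fixed wait, as in the procedure, so redeploys can finish before pruning.
  await sleep(waitMs);
  // 3. Prune old images on that server (PruneImages with a `server` parameter is an assumption).
  await client.execute("PruneImages", { server });
}
```

A fixed wait is simple but blunt; polling the returned updates, as the stack script does, would avoid guessing how long redeploys take.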

If a stack needs to be auto-updated, configure it to check for updates and redeploy the images, i.e. enable the Auto Update option described above in the stack configuration.

The Full Stack Auto Update option is useful if you have dependencies within your stack. For example, I prefer to redeploy both the authentik server and worker whenever there is an update, to prevent errors.
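The difference between the default behaviour and Full Stack Auto Update comes down to which services get redeployed. A minimal TypeScript sketch, where `servicesToRedeploy` is a hypothetical helper and the service names are illustrative ones for an authentik-style stack:

```typescript
// Which services of a stack are redeployed when newer images are found.
function servicesToRedeploy(
  services: string[],
  withNewerImage: Set<string>,
  fullStackAutoUpdate: boolean,
): string[] {
  if (withNewerImage.size === 0) return []; // nothing changed, nothing to redeploy
  // Default: only services whose image changed. Full stack: every service in the stack.
  return fullStackAutoUpdate ? [...services] : services.filter(s => withNewerImage.has(s));
}

const authentik = ["server", "worker", "postgresql", "redis"]; // illustrative service names
console.log(servicesToRedeploy(authentik, new Set(["server"]), false).join(", ")); // server
console.log(servicesToRedeploy(authentik, new Set(["server"]), true).join(", "));  // server, worker, postgresql, redis
```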

Conclusion

So there we have it! We successfully recreated the Unraid auto-update and auto-backup plugin setups in Komodo.

It's not the most elegant solution, but it works and scales pretty well with many stacks. If you want to change the backup policy and behavior, you just have to change the borgmatic config.yaml or the tags associated with your Komodo stacks.

Hope this was useful!